Course Study with CSC321

[2021.06.05]

Topic : Lecture 14: Recurrent Neural Networks (Hayden, James)

Notes :

- https://drive.google.com/file/d/1qP1_SBwEeFE8CQ0T_Pd9veb2H6JEVRbP/view?usp=sharing [Hayden]

- https://drive.google.com/file/d/1otSFBwYQcOD1dgZqup5ZhQkvX1HIalIW/view?usp=sharing [James]

Links :

- https://www.cs.toronto.edu/~rgrosse/courses/csc321_2017/readings/L14%20Recurrent%20Neural%20Nets.pdf (CSC321, EN)

- https://blog.naver.com/PostView.nhn?blogId=winddori2002&logNo=221974391796 (RNN computation flow, KR)

- https://curt-park.github.io/2017-03-26/yolo/ (computation flow, KR)

- http://bigdata.dongguk.ac.kr/lectures/TextMining/_book/%EC%96%B8%EC%96%B4-%EB%AA%A8%EB%8D%B8language-model.html (Language Modeling, KR)

- https://gruuuuu.github.io/machine-learning/lstm-doc/ (why tanh is used rather than sigmoid or ReLU, KR)

Covered in the study session

- 1.Introduction

- Tasks that predict ‘sequences’

- From neural language models to RNNs

- 2.Recurrent Neural Nets

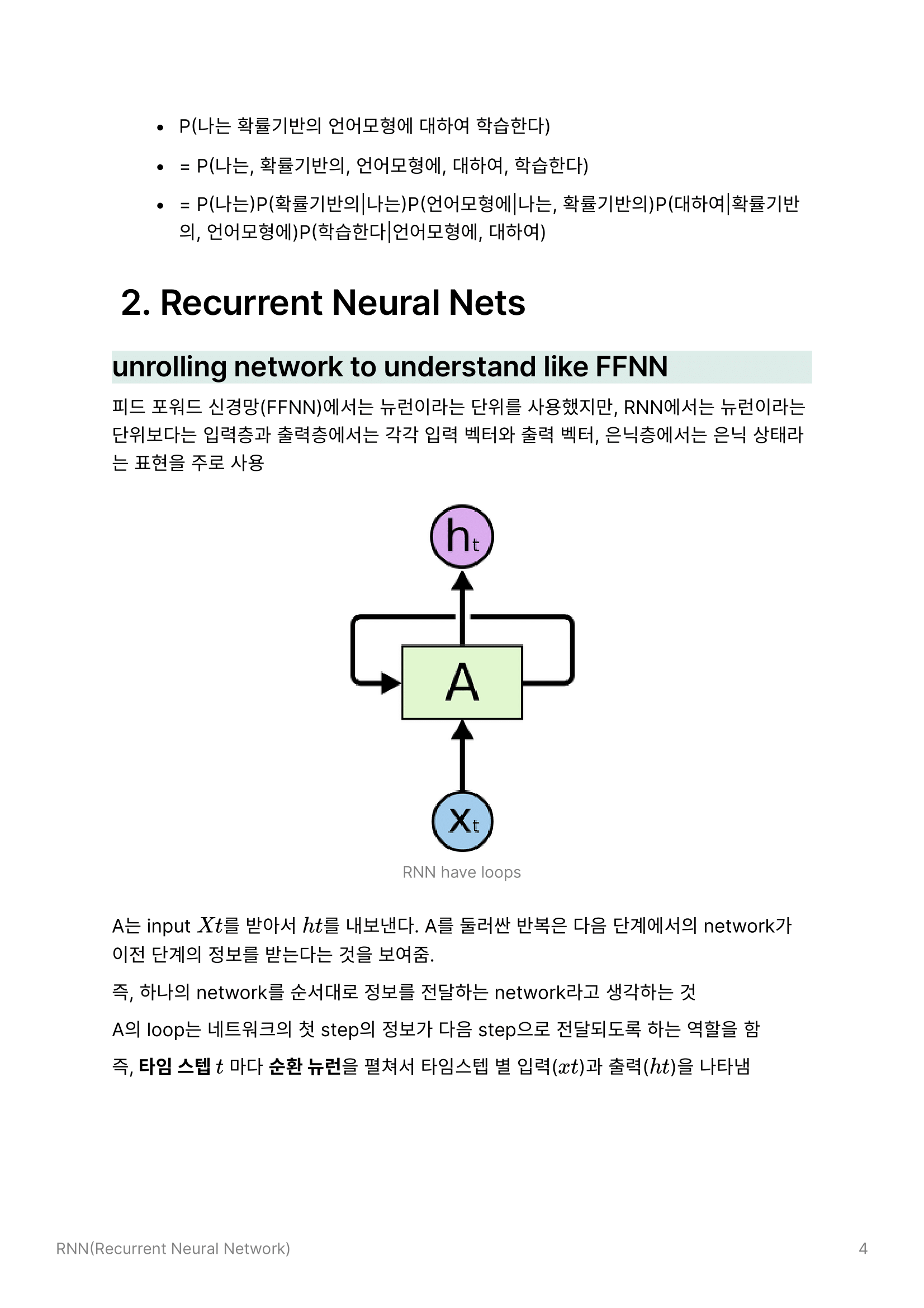

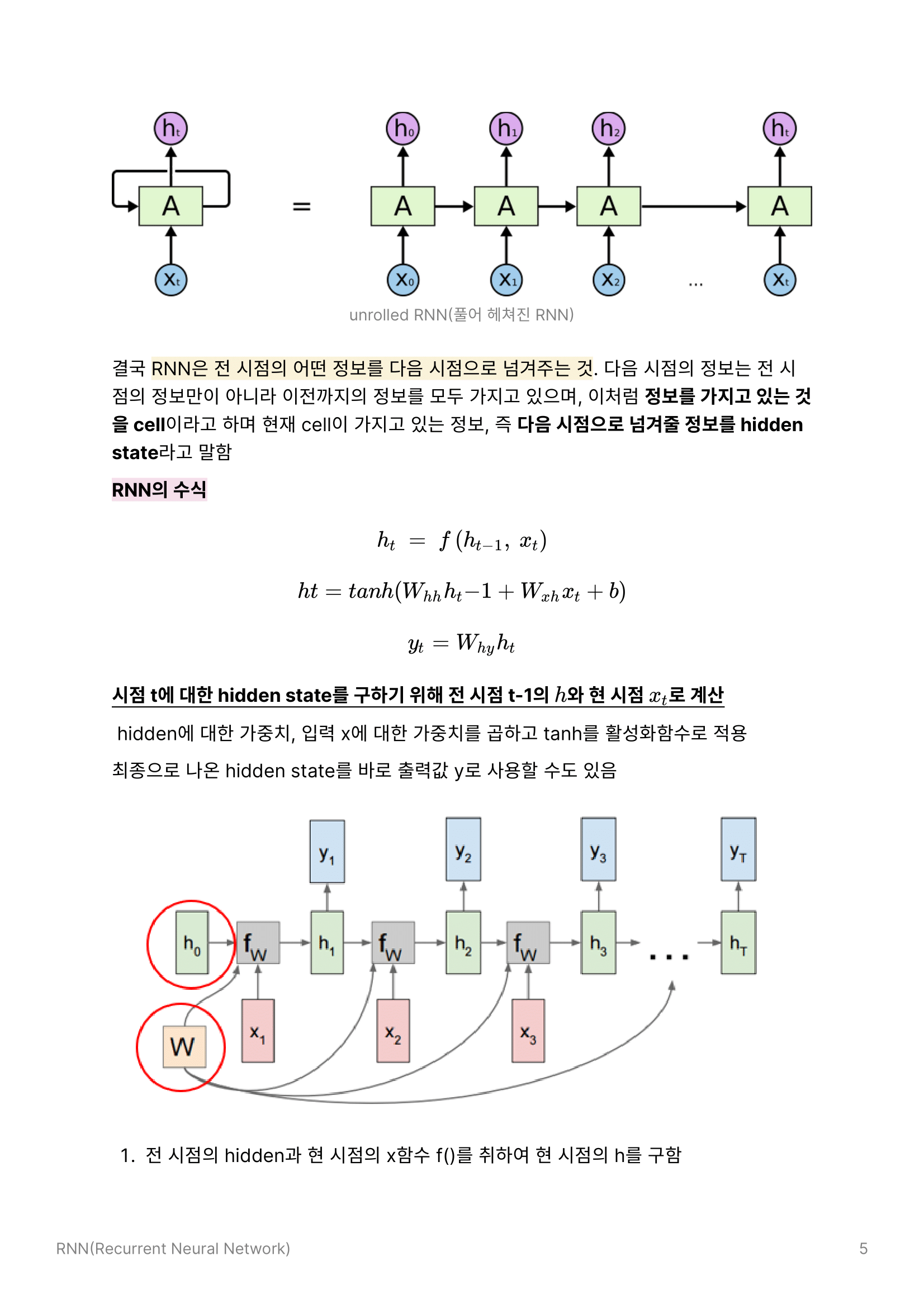

- Unrolling the network so it can be read like a feed-forward neural network (FFNN)

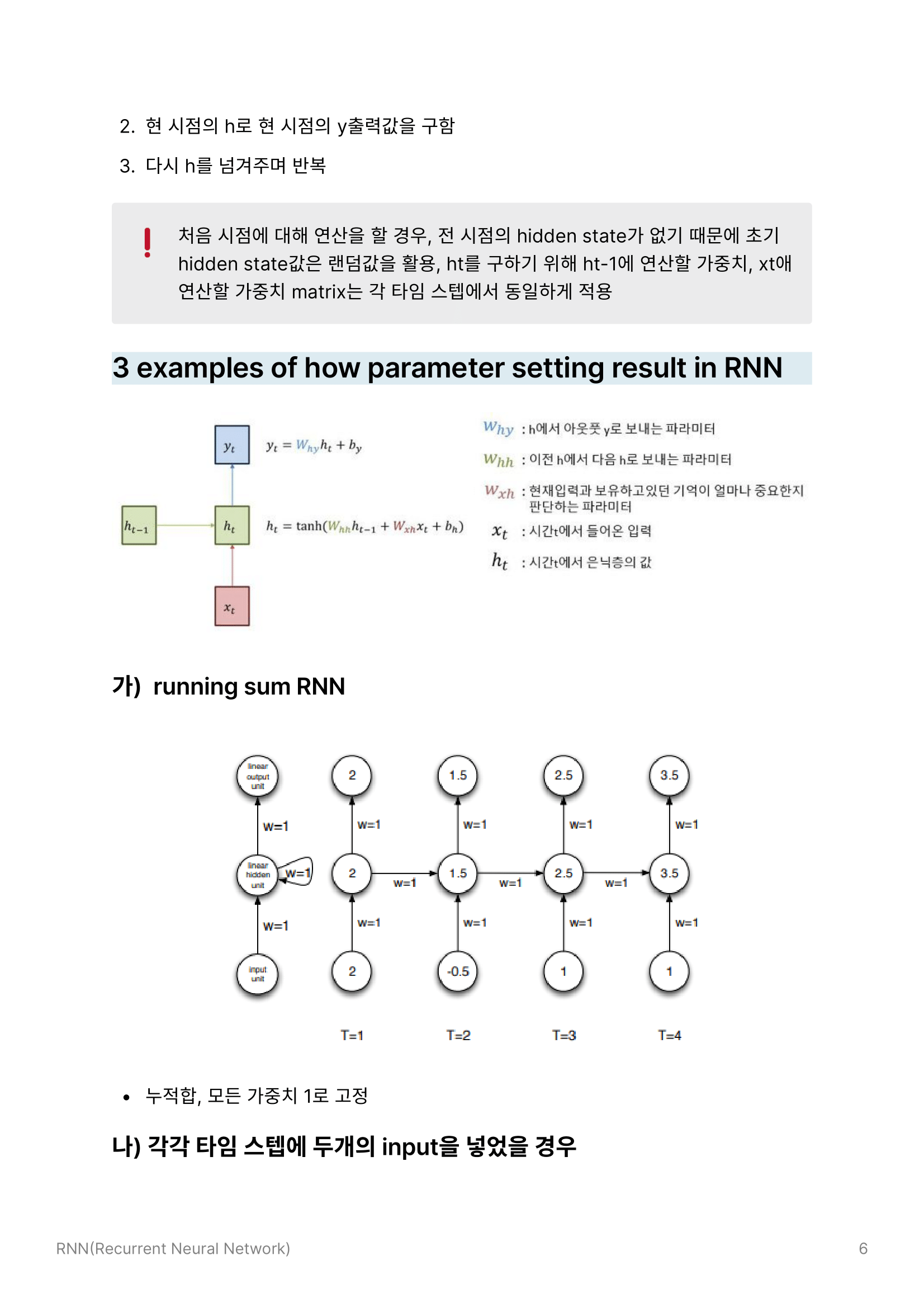

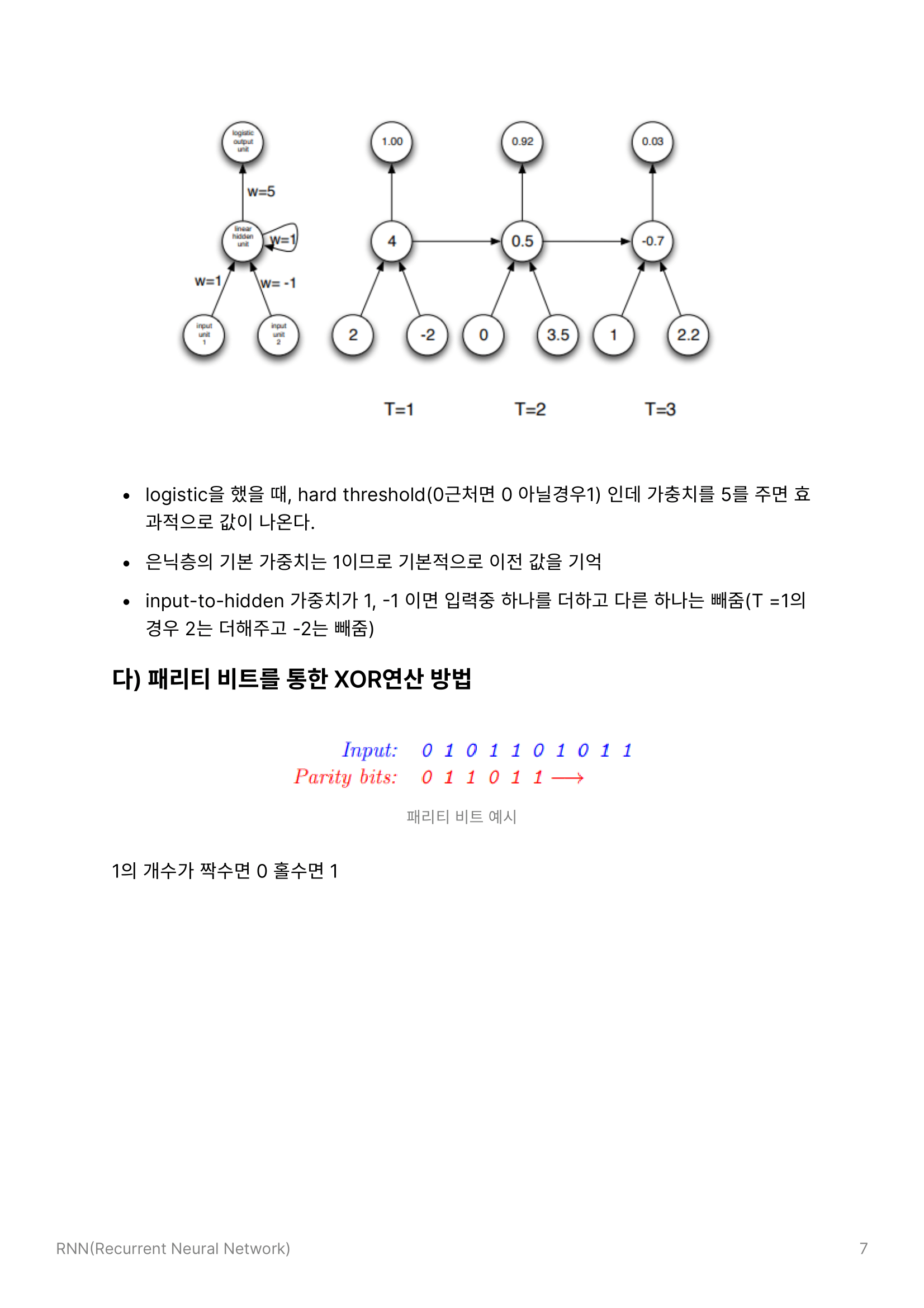

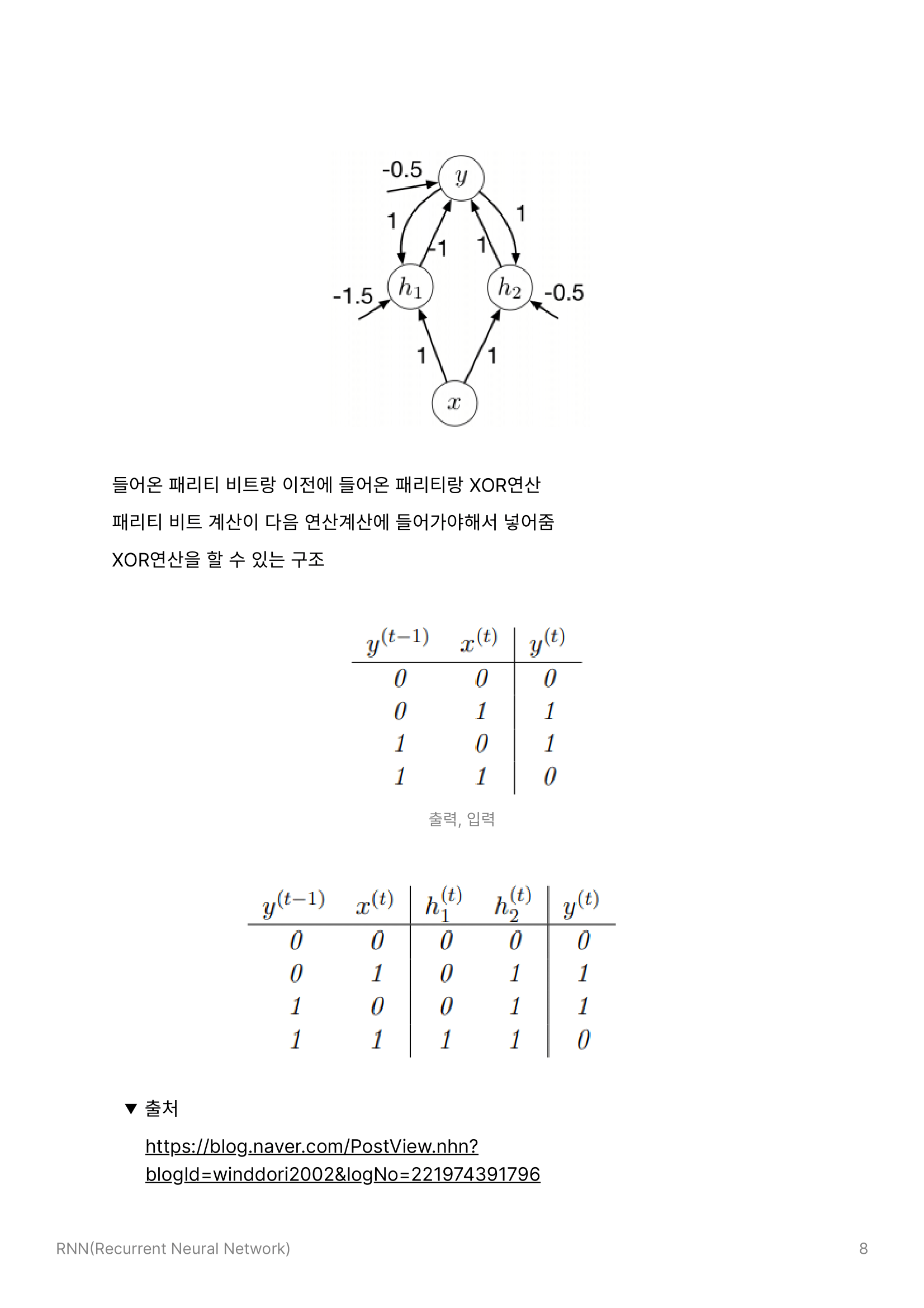
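A minimal sketch of the unrolled view, assuming a vanilla RNN with tanh hidden units and a linear output (the images above may use different activations). The names `rnn_forward`, `W_xh`, `W_hh`, `W_hy`, `b_h`, `b_y` are made up for illustration. Each loop iteration is one "layer" of the unrolled network, and every layer reuses the same weights.

```python
import math

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y, h0):
    """Run the RNN over the sequence xs; return hidden states and outputs per step."""
    h = h0
    hs, ys = [], []
    for x in xs:  # one iteration = one time step of the unrolled network
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]                     # new hidden state
        y = [a + b for a, b in zip(matvec(W_hy, h), b_y)]   # linear output
        hs.append(h)
        ys.append(y)
    return hs, ys

# Toy sizes (assumed): 1 input unit, 2 hidden units, 1 output unit.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, -0.1]]
W_hy = [[1.0, -1.0]]
b_h, b_y = [0.0, 0.0], [0.0]
xs = [[1.0], [0.0], [-1.0]]

hs, ys = rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y, h0=[0.0, 0.0])
```

With three inputs the loop runs three times, so the unrolled network is a 3-layer feed-forward net whose layers share `W_xh`, `W_hh`, and `W_hy`.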

- 3 examples of how hand-set parameters determine what an RNN computes

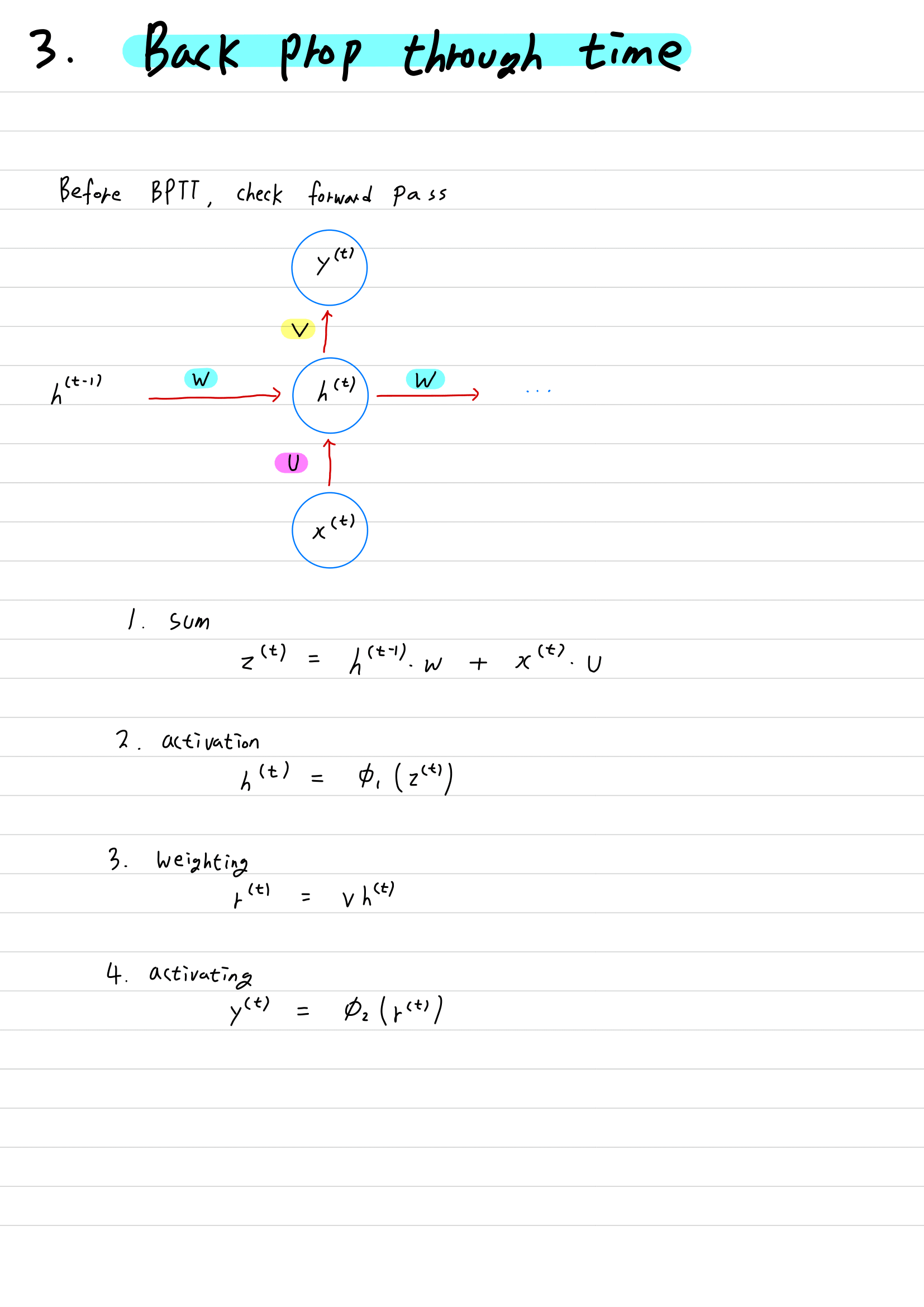

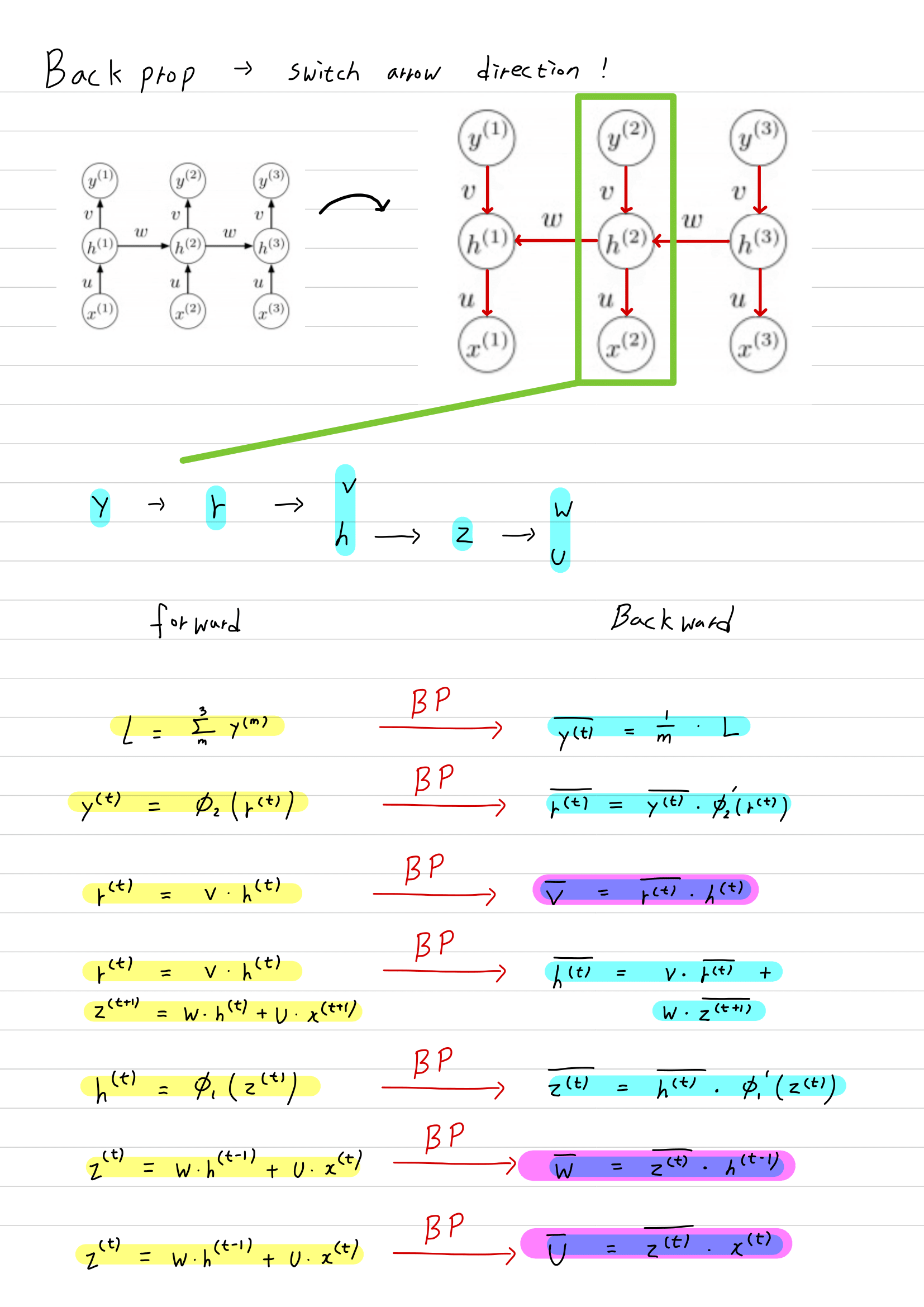

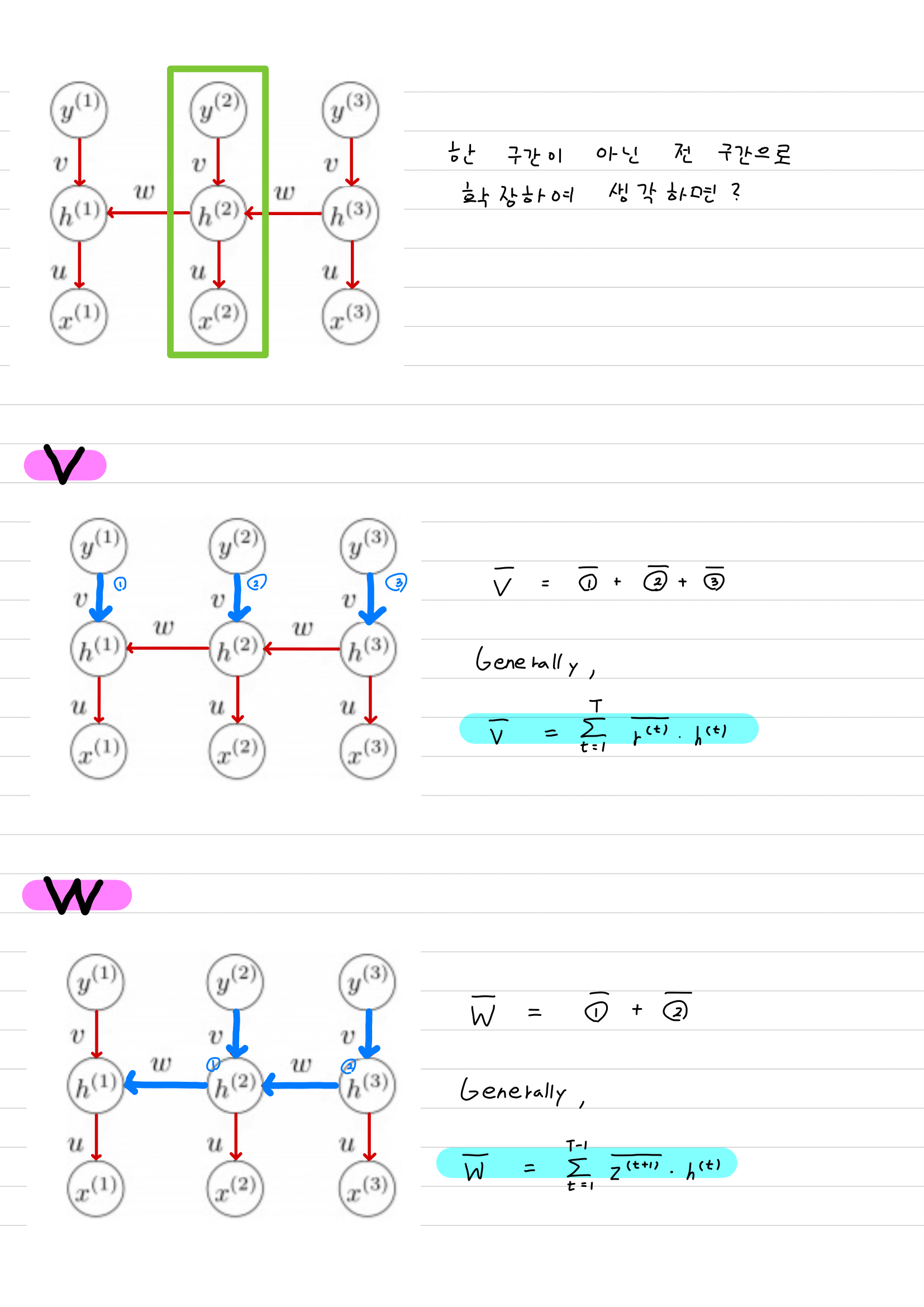

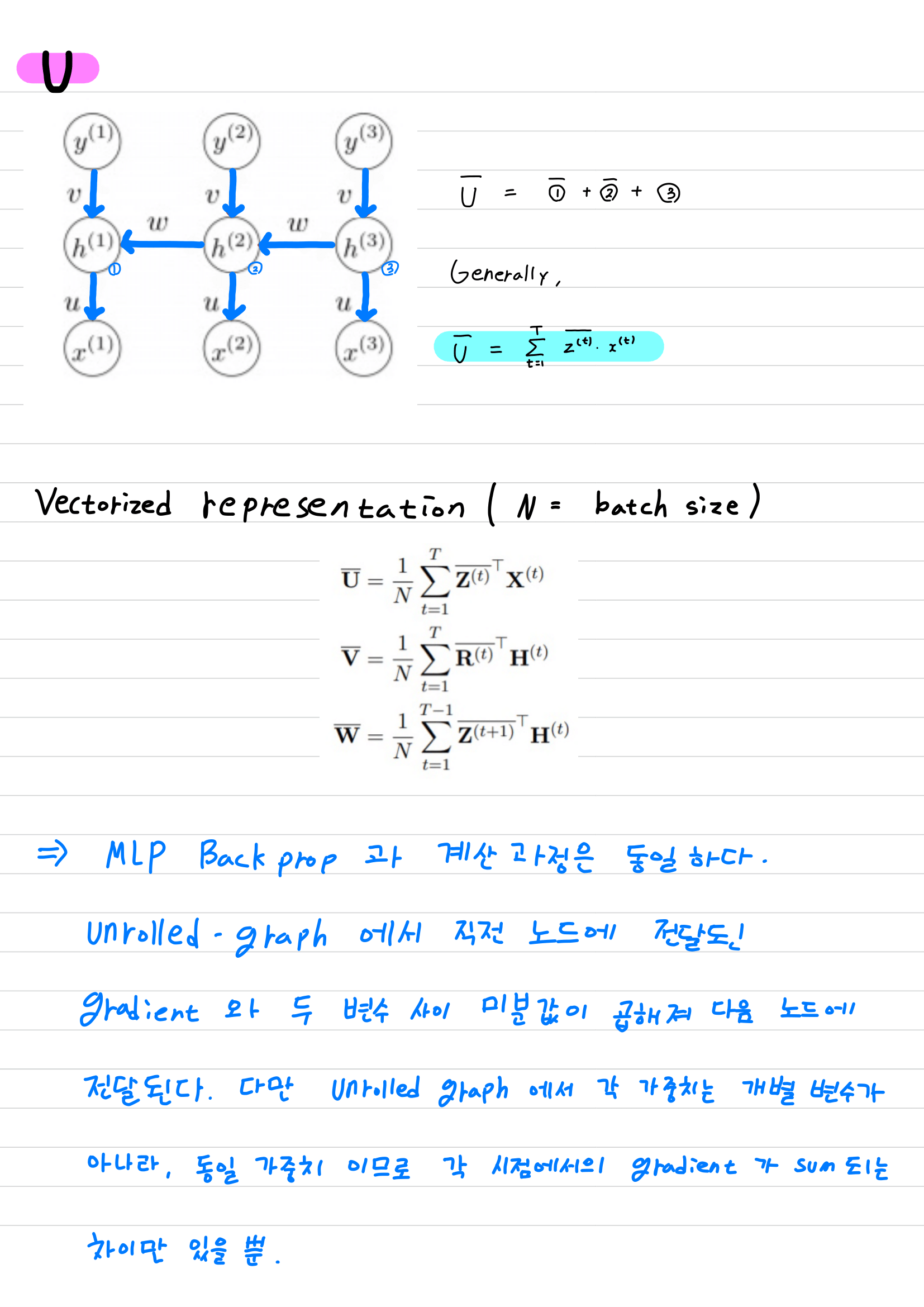
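One way to see how a parameter setting fixes the behavior is an RNN that keeps a running sum of its inputs. This is a sketch under assumptions: one linear hidden unit, no bias, all three weights set to 1. The function name `linear_rnn` and the input values are illustrative and not read from the images above.

```python
def linear_rnn(xs, w_in, w_rec, w_out, h0=0.0):
    """Scalar RNN with a linear hidden unit and no biases."""
    h = h0
    ys = []
    for x in xs:
        h = w_in * x + w_rec * h   # hidden state carries information forward
        ys.append(w_out * h)       # output at this time step
    return ys

# With every weight equal to 1 the hidden unit accumulates the inputs.
running_sum = linear_rnn([2.0, -0.5, 1.0, 1.0], w_in=1.0, w_rec=1.0, w_out=1.0)
print(running_sum)  # [2.0, 1.5, 2.5, 3.5]
```

Changing `w_rec` to a value below 1 would turn the same network into a decaying average, which shows that the behavior comes from the weights rather than the architecture.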

- 3.Backprop Through Time

- View it as MLP backprop on the unrolled computation graph

- Comparing with MLP backprop
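A small sketch of backprop through time, assuming a scalar RNN with a tanh hidden unit, a linear output, and squared-error loss summed over time steps (all assumptions for illustration, as are the names `forward`, `loss`, `bptt`). The main difference from MLP backprop is that the weights are shared across time, so their gradients are summed over all steps, and the hidden state receives gradient both from its own output and from the next time step.

```python
import math

def forward(xs, u, w, v, h0=0.0):
    """h_t = tanh(u*x_t + w*h_{t-1}), y_t = v*h_t. hs[0] is the initial state."""
    hs, ys = [h0], []
    for x in xs:
        hs.append(math.tanh(u * x + w * hs[-1]))
        ys.append(v * hs[-1])
    return hs, ys

def loss(xs, ts, u, w, v):
    _, ys = forward(xs, u, w, v)
    return sum(0.5 * (y - t) ** 2 for y, t in zip(ys, ts))

def bptt(xs, ts, u, w, v):
    """Return dL/du, dL/dw, dL/dv by walking the unrolled graph backwards."""
    hs, ys = forward(xs, u, w, v)
    du = dw = dv = 0.0
    dh_next = 0.0                        # gradient arriving from step t+1
    for t in reversed(range(len(xs))):
        h, h_prev = hs[t + 1], hs[t]
        dy = ys[t] - ts[t]               # dL/dy_t
        dv += dy * h                     # shared weight: accumulate over time
        dh = dy * v + dh_next            # from the output and from the future
        dz = dh * (1.0 - h * h)          # through tanh
        du += dz * xs[t]
        dw += dz * h_prev
        dh_next = dz * w                 # pass gradient back to h_{t-1}
    return du, dw, dv

# Check the recurrent-weight gradient against a central finite difference.
xs, ts = [0.5, -1.0, 0.25], [0.1, -0.2, 0.3]
u, w, v = 0.7, -0.4, 1.3
du, dw, dv = bptt(xs, ts, u, w, v)
eps = 1e-6
num_dw = (loss(xs, ts, u, w + eps, v) - loss(xs, ts, u, w - eps, v)) / (2 * eps)
```

If the backward pass is right, `dw` and `num_dw` agree to several decimal places.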

- 4.Sequence Modeling (which tasks RNNs can be applied to)

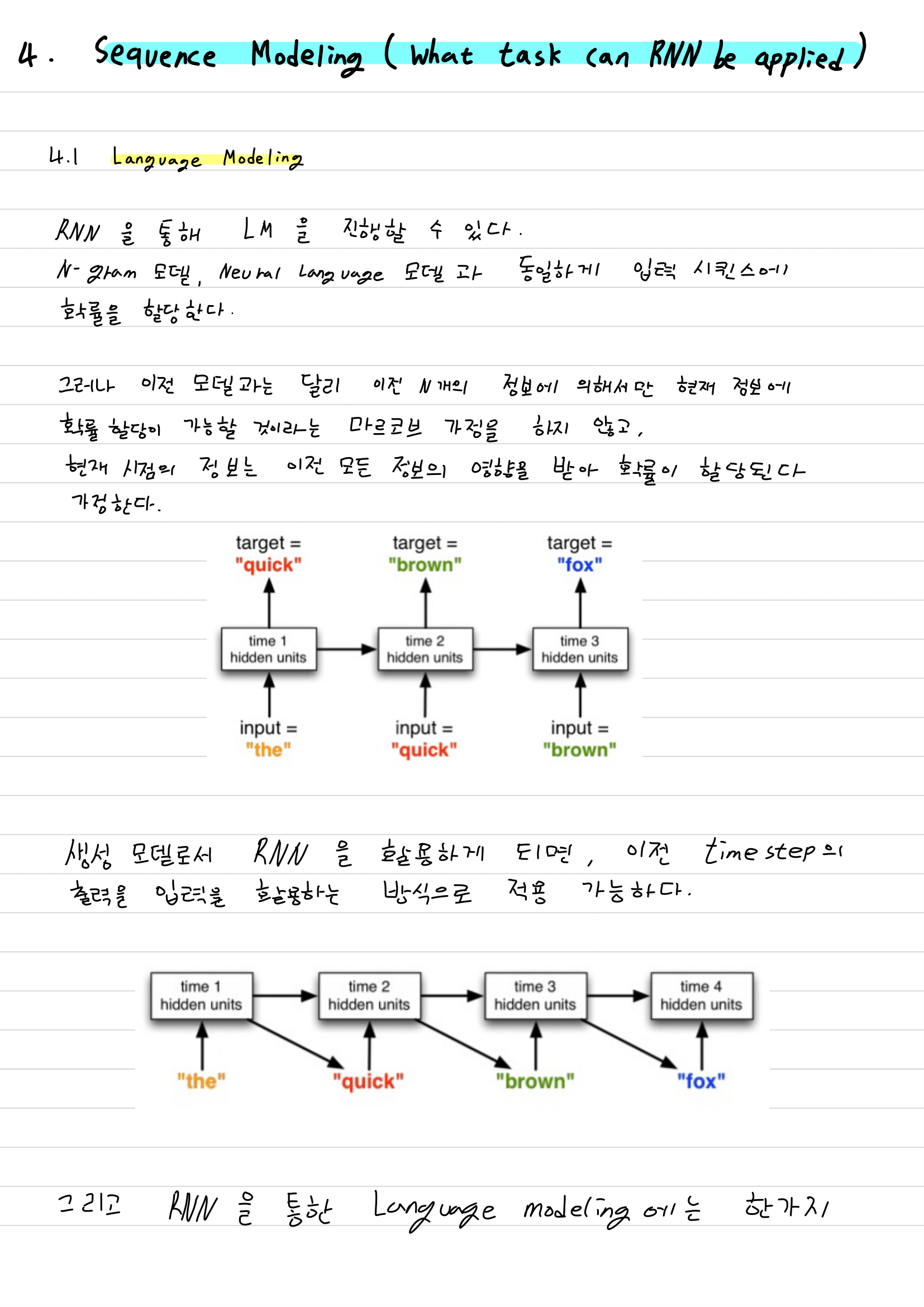

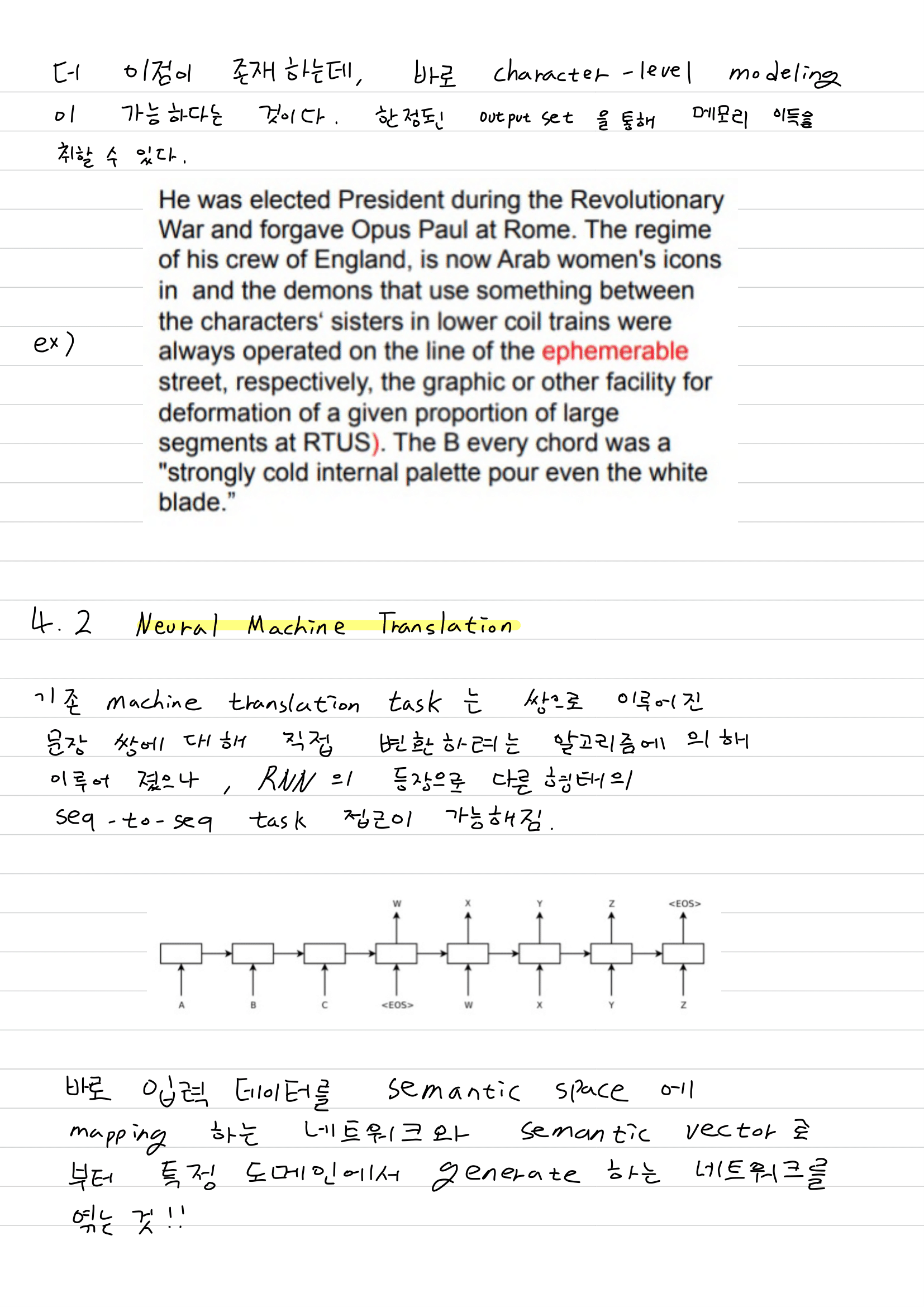

- Language Modeling

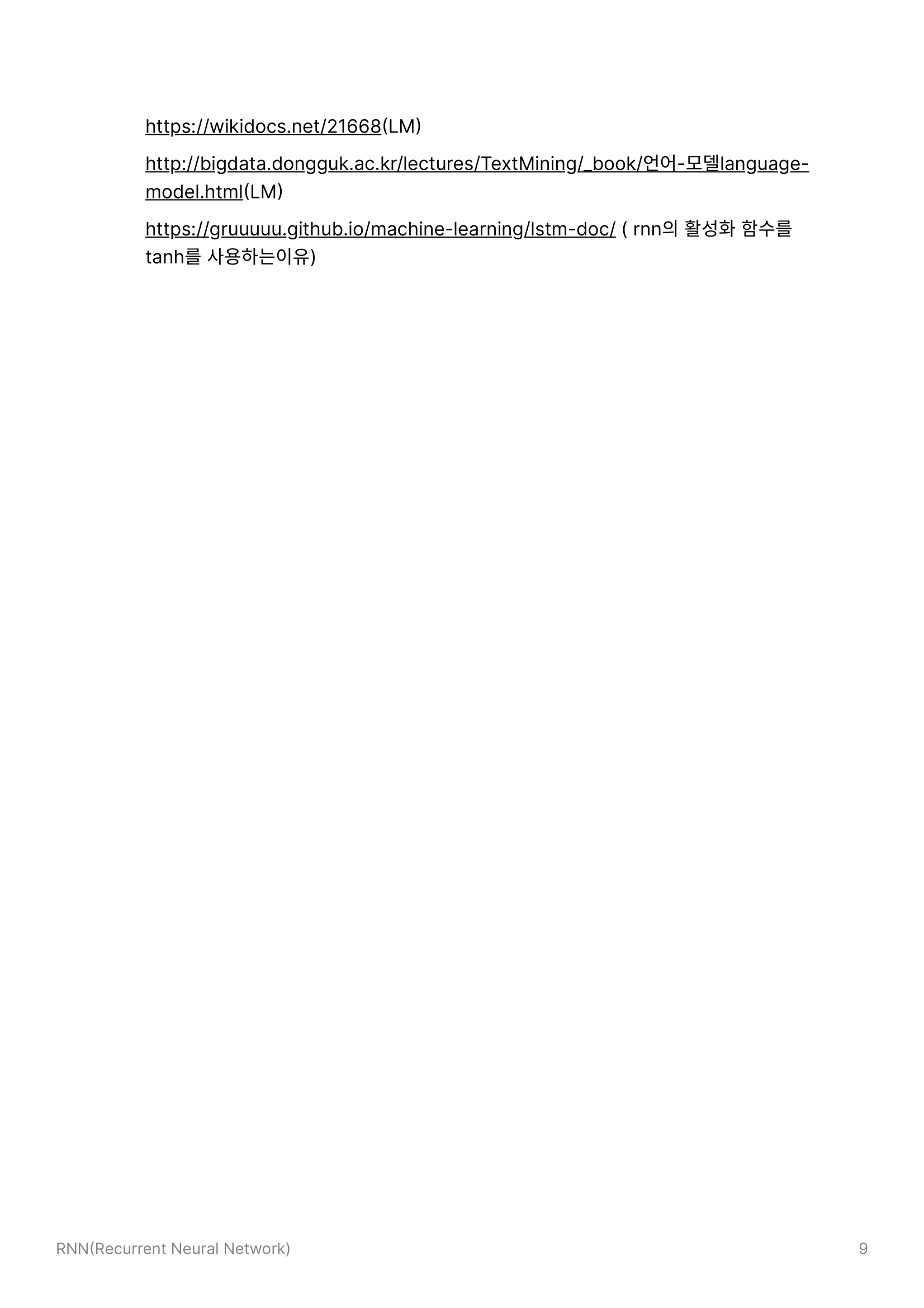
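For language modeling, the sequence probability factorizes by the chain rule into per-step next-word distributions, so the log-probability of a sentence is the sum of per-step log-probabilities. A minimal sketch, assuming the model emits one vector of logits over the vocabulary at each step; the logits below are made up rather than produced by a trained RNN, and `sequence_log_prob` is a hypothetical helper name.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over one vector of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - log_z for l in logits]

def sequence_log_prob(step_logits, target_ids):
    """Sum over t of log p(w_t | w_1..w_{t-1}); step_logits[t] are the outputs at step t."""
    return sum(log_softmax(logits)[w] for logits, w in zip(step_logits, target_ids))

# Toy case: vocabulary of 4 words, uniform predictions at each of 3 steps.
uniform_logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)]
lp = sequence_log_prob(uniform_logits, target_ids=[0, 3, 1])
```

Here `lp` equals `3 * log(1/4)`, since each word gets probability 1/4. The negative of this quantity is the cross-entropy loss that training would minimize.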

- Neural Machine Translation

- Learning to Execute Programs (removed)
